Get started with NER models

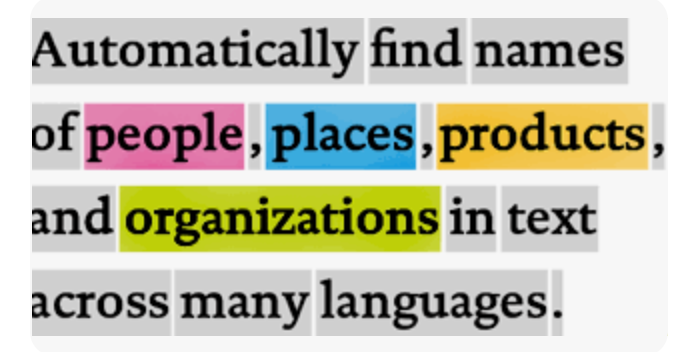

Named Entity Recognition (NER) is a vital NLP task that involves identifying named entities in text, such as people, organizations, places, and monetary amounts, and classifying them by type. It has many real-world applications, such as information extraction, question answering, and sentiment analysis. Pre-trained NER models are available from popular NLP libraries such as spaCy, Hugging Face Transformers, and NLTK.

In this example, we’ll run each library’s pre-trained model on the same sentence and compare the entities it finds.

```python
text = "Apple is looking at buying U.K. startup for $1 billion"
```

spaCy – NER

This code uses the spaCy library to extract named entities from the text. It loads spaCy's small pre-trained English pipeline, "en_core_web_sm", and runs it on the text to produce a Doc object. The recognized entities are available as doc.ents, and for each one the code keeps its text and its label (ent.label_).

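```python
import spacy

# The model must be installed once beforehand:
#   python -m spacy download en_core_web_sm
nlp = spacy.load("en_core_web_sm")

# Run the loaded pipeline (including its NER component) on the text.
doc = nlp(text)

# doc.ents holds the recognized entity spans; keep each span's text and label.
spacy_entities = [(ent.text, ent.label_) for ent in doc.ents]

print("Spacy NER:", spacy_entities)
```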

Spacy NER: [('Apple', 'ORG'), ('U.K.', 'GPE'), ('$1 billion', 'MONEY')]
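If you would rather see the entities grouped by type than as a flat list, a small helper can do that. The sketch below is plain Python; group_by_label is a hypothetical helper name, and the input list is a literal copy of the spaCy output printed above so that the snippet runs on its own. (To decode a label such as GPE, spaCy also provides spacy.explain(), which returns a short description of the label.)

```python
from collections import defaultdict


def group_by_label(entities):
    """Group (text, label) pairs into {label: [text, ...]}, keeping input order."""
    grouped = defaultdict(list)
    for text, label in entities:
        grouped[label].append(text)
    return dict(grouped)


# Literal copy of the spaCy output printed above (not recomputed here).
spacy_output = [("Apple", "ORG"), ("U.K.", "GPE"), ("$1 billion", "MONEY")]
print(group_by_label(spacy_output))
# → {'ORG': ['Apple'], 'GPE': ['U.K.'], 'MONEY': ['$1 billion']}
```

In the notebook itself, you would call group_by_label(spacy_entities) directly.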

Hugging Face – NER

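This code loads a pre-trained model, "dbmdz/bert-large-cased-finetuned-conll03-english", together with its tokenizer. It then builds a pipeline with the pipeline() function from the Hugging Face Transformers library, specifying the "ner" task and passing in the loaded model and tokenizer. Because the model was fine-tuned on CoNLL-2003, it only knows four entity types (PER, ORG, LOC, and MISC), so it has no label for an amount such as "$1 billion". By default, the pipeline also returns one prediction per token, which is why "U.K." comes back as separate pieces.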

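```python
from transformers import pipeline, AutoModelForTokenClassification, AutoTokenizer

# BERT-large fine-tuned for NER on the CoNLL-2003 English dataset.
model_name = "dbmdz/bert-large-cased-finetuned-conll03-english"

tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForTokenClassification.from_pretrained(model_name)

# Token-classification ("ner") pipeline on the PyTorch backend ("pt").
hf_ner = pipeline("ner", model=model, tokenizer=tokenizer, framework="pt")

# Without aggregation, each token is returned as a separate dict
# with keys such as "word", "entity", "score", "start" and "end".
hf_entities = hf_ner(text)
hf_entities = [(ent["word"], ent["entity"]) for ent in hf_entities]

print("Hugging Face NER:", hf_entities)
```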

Hugging Face NER: [('Apple', 'I-ORG'), ('U', 'I-LOC'), ('K', 'I-LOC')]
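The I-ORG and I-LOC labels follow the IOB tagging scheme. In this scheme, a B- prefix marks the beginning of an entity, I- marks a token inside one, and O marks a token outside any entity. The Transformers pipeline can merge per-token predictions into whole entities if you pass aggregation_strategy="simple" to pipeline(). To show what that kind of merging involves, here is a minimal pure-Python sketch of an IOB decoder. The function name decode_iob and the hand-written token offsets and tags are hypothetical illustrations, not actual model output.

```python
def decode_iob(text, tokens):
    """Merge IOB-tagged tokens into (entity_text, label) spans.

    tokens is a list of (start, end, tag) tuples, where start/end are
    character offsets into text and tag is e.g. "B-LOC", "I-LOC" or "O".
    """
    spans = []
    current = None  # [start, end, label] of the entity being built
    for start, end, tag in tokens:
        if tag == "O":
            # An outside token closes any open entity.
            if current is not None:
                spans.append(current)
                current = None
            continue
        prefix, label = tag.split("-", 1)
        if prefix == "I" and current is not None and current[2] == label:
            # Same entity type continues: extend the open span.
            current[1] = end
        else:
            # A "B-" tag or a change of type starts a new entity.
            if current is not None:
                spans.append(current)
            current = [start, end, label]
    if current is not None:
        spans.append(current)
    # Slice the original text so that punctuation and spacing are preserved.
    return [(text[s:e], label) for s, e, label in spans]


text = "Apple is looking at buying U.K. startup for $1 billion"
# Hypothetical per-token tags, written by hand for illustration.
tokens = [
    (0, 5, "I-ORG"),    # Apple
    (6, 8, "O"),        # is
    (27, 28, "I-LOC"),  # U
    (28, 29, "I-LOC"),  # .
    (29, 30, "I-LOC"),  # K
    (30, 31, "I-LOC"),  # .
    (32, 39, "O"),      # startup
]
print(decode_iob(text, tokens))
# → [('Apple', 'ORG'), ('U.K.', 'LOC')]
```

The sketch slices the original string by character offsets instead of joining token strings. This keeps "U.K." intact, where joining tokens with spaces would produce "U . K .".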

NLTK – NER

This code splits the text into sentences with sent_tokenize(), splits each sentence into words with word_tokenize(), and tags each word with its part of speech using pos_tag(). Finally, ne_chunk() groups the tagged words into named-entity chunks. It returns a tree in which entities are labelled subtrees and all other words remain plain (word, tag) pairs, so the code keeps only the children that have a label().

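```python
import nltk

# One-time downloads of the tokenizer, POS tagger and NE chunker data.
# These names are used by NLTK 3.9 and later; older releases use
# "punkt", "averaged_perceptron_tagger" and "maxent_ne_chunker" instead.
for resource in ["punkt_tab", "averaged_perceptron_tagger_eng", "maxent_ne_chunker_tab", "words"]:
    nltk.download(resource, quiet=True)

# Entity chunks are labelled subtrees; other tokens are (word, tag) tuples.
nltk_entities = [(" ".join(word for word, tag in chunk), chunk.label())
                 for sent in nltk.sent_tokenize(text)
                 for chunk in nltk.ne_chunk(nltk.pos_tag(nltk.word_tokenize(sent)))
                 if hasattr(chunk, "label")]

print("NLTK NER:", nltk_entities)
```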

NLTK NER: [('Apple', 'GPE')]
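To compare the three libraries side by side, the following minimal sketch copies the printed outputs above as literal data (not recomputed). It then finds the entity strings that every library reported and shows how each library labelled them. The helper name strip_iob is hypothetical. It only removes the B-/I- prefixes so that the Hugging Face labels line up with the others.

```python
# Outputs copied verbatim from the three runs above (not recomputed here).
results = {
    "spaCy": [("Apple", "ORG"), ("U.K.", "GPE"), ("$1 billion", "MONEY")],
    "Hugging Face": [("Apple", "I-ORG"), ("U", "I-LOC"), ("K", "I-LOC")],
    "NLTK": [("Apple", "GPE")],
}


def strip_iob(label):
    """Drop a leading IOB prefix ("B-" or "I-") so labels are comparable."""
    return label[2:] if label[:2] in ("B-", "I-") else label


# Entity strings reported by every library (exact string match).
found_by_all = set.intersection(*({t for t, _ in ents} for ents in results.values()))
print("Found by all:", found_by_all)

# How each library labelled the shared entities.
for entity in sorted(found_by_all):
    labels = {name: strip_iob(dict(ents)[entity]) for name, ents in results.items()}
    print(entity, labels)
```

Exact string matching undercounts agreement here, because the Hugging Face pieces "U" and "K" never match spaCy's "U.K.". The label sets also differ between libraries (for example, spaCy's GPE versus CoNLL's LOC). A fairer comparison would first merge tokens into spans and map labels onto a shared scheme.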